Fighting a Crisis with Better Data

The year 2020 gave the world a global crisis - a pandemic caused by SARS-CoV-2, the new coronavirus strain behind COVID-19. The world set out to fight this crisis with a novel toolkit - data-driven services.

We saw mobile phone location data used to detect whether populations were following social distancing rules (USA, Estonia, Google). As a more targeted application, data was also used to check whether individuals complied with their quarantines (South Korea, Poland).

While some of these applications are based on consent from the data owner, others would need to get information about whole populations in order to be useful. It is this second group of applications that helps a government make decisions in a crisis. Should we enforce stronger lockdowns or are people following the rules anyway? Better data will help make better decisions, whether we are in a crisis or not.

How to make sure that a crisis-time data tool is dismantled after the crisis?

Usually, a service provider collects, stores and processes the data. There are typically no technical limits on what kind of processing is possible. In some territories, data protection regulation like the European General Data Protection Regulation (GDPR) requires that the purpose and scope of processing are documented.

However, few if any organisations deploy monitoring or enforcement technology to ensure that data collected for one purpose cannot be used for another. For example, it is hard to convince a person or a company that data granted to an IT system during an emergency will not be used for something else once the crisis has passed.

To fully convince the data owner, we should be able to show that:

- the service provider cannot see the values in the database (cannot copy them for other uses);

- the service provider is technically incapable of performing computations other than the ones that the data owner agreed to when providing the data;

- there is no single party who could overrule the two guarantees above.

Cryptographic Secure Multi-Party Computation

Secure multi-party computation (MPC) is a technique that lets multiple parties jointly compute a function so that each data owner sees only its own inputs and nothing else (the security property).

It can be achieved with special types of encryption or hardware, for example /homomorphic secret sharing/, /homomorphic encryption/ or /trusted execution environments/ (like Intel(R) Software Guard Extensions, SGX).

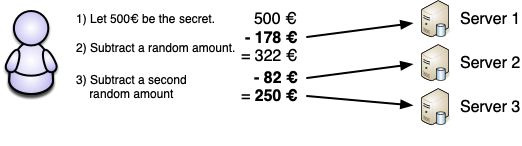

As an example, consider additive secret sharing on integers. To protect a value, the data owner generates two random numbers and subtracts them from the secret (using modular arithmetic to avoid negative numbers). The two random numbers and the resulting difference are the three shares of the secret, and adding all three together gives the secret back. See the figure for an example.
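The sharing step can be sketched in a few lines of Python. This is a minimal illustration, not Sharemind's implementation: the modulus of 2**32 and the function names `share` and `reconstruct` are assumptions made for the example.

```python
import secrets

MODULUS = 2**32  # assumed ring size, chosen only for illustration


def share(secret: int, modulus: int = MODULUS) -> tuple[int, int, int]:
    """Split `secret` into three additive shares modulo `modulus`."""
    share1 = secrets.randbelow(modulus)  # first random number
    share2 = secrets.randbelow(modulus)  # second random number
    # The third share is what remains after subtracting both random numbers.
    share3 = (secret - share1 - share2) % modulus
    return share1, share2, share3


def reconstruct(shares: tuple[int, int, int], modulus: int = MODULUS) -> int:
    """Recover the secret by adding all three shares modulo `modulus`."""
    return sum(shares) % modulus


if __name__ == "__main__":
    shares = share(42)
    print("shares:", shares)
    print("reconstructed:", reconstruct(shares))
```

The shares differ on every run, but adding all three always gives back the original value.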

Assume that several data owners have split their secret values into three random pieces as described and distributed the pieces between three servers. Now the servers can engage in protocols that compute new shares for sums, products or more complex functions of the input shares, without ever reconstructing the inputs. See the following video for an explanation.
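Addition is the simplest of these protocols, because each server can add the shares it holds without talking to the others. The sketch below assumes the same illustrative modulus of 2**32 and uses hypothetical helper names. It covers sums only, since products need the servers to exchange messages.

```python
import secrets

MODULUS = 2**32  # assumed ring size, chosen only for illustration


def share(secret: int, modulus: int = MODULUS) -> list[int]:
    """Split `secret` into three additive shares, one per server."""
    r1 = secrets.randbelow(modulus)
    r2 = secrets.randbelow(modulus)
    return [r1, r2, (secret - r1 - r2) % modulus]


def add_shared(a_shares: list[int], b_shares: list[int],
               modulus: int = MODULUS) -> list[int]:
    """Each server adds the two shares it holds; no communication is needed."""
    return [(a + b) % modulus for a, b in zip(a_shares, b_shares)]


def reconstruct(shares: list[int], modulus: int = MODULUS) -> int:
    """Combine the shares from all three servers to reveal the value."""
    return sum(shares) % modulus


if __name__ == "__main__":
    alice = share(1200)  # first data owner's secret
    bob = share(34)      # second data owner's secret
    total = add_shared(alice, bob)
    print("sum of the secrets:", reconstruct(total))
```

Only the final shares of the sum are combined, so the individual inputs stay hidden from every server.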

Security of Secure MPC

We will explain the security guarantees based on Cybernetica's Sharemind MPC system.

Our first goal was that "the service provider cannot see the values". This is achieved by the randomness of the shares. Two of them are generated randomly, and subtracting random values from the secret gives a third share that, taken on its own, is random as well. Since each server sees only random values, it cannot reconstruct the secrets without getting the other shares. Thus, we have fulfilled our first goal.

Bonus explanation for those who looked at the animated explainer - operations other than addition require that the servers exchange messages. However, these protocols can be built in a way that prevents the servers from learning the secrets. You can find out more about this at the Sharemind MPC research page.
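For a small modulus, the randomness argument can be checked by brute force. The sketch below, with an assumed toy modulus of 5 and a hypothetical function name, enumerates every possible pair of random numbers and counts which values each server ends up holding. It looks at single shares only and is an illustration, not a security proof.

```python
from collections import Counter
from itertools import product


def share_distribution(secret: int, modulus: int) -> list[Counter]:
    """Count the values each of the three servers sees over all random choices."""
    counts = [Counter(), Counter(), Counter()]
    for r1, r2 in product(range(modulus), repeat=2):
        shares = (r1, r2, (secret - r1 - r2) % modulus)
        for server, value in enumerate(shares):
            counts[server][value] += 1
    return counts


if __name__ == "__main__":
    modulus = 5  # toy modulus so that full enumeration stays tiny
    for secret in range(modulus):
        for server_counts in share_distribution(secret, modulus):
            # Every value appears equally often, whatever the secret is.
            assert all(server_counts[v] == modulus for v in range(modulus))
    print("each single share is uniformly distributed for every secret")
```

Because the counts are identical for every secret, a server looking only at its own share learns nothing about which secret was shared.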

The second goal was to prevent the service provider from performing arbitrary computations. Sharemind MPC achieves this by having a list of allowed MPC programs at each server and not accepting any uploads of new programs. This means that unless all Sharemind MPC nodes agree to run a query or program, it cannot be run.
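The idea of a per-server list of allowed programs can be illustrated with a toy model. Everything in this sketch is hypothetical: the `Node` class, the use of SHA-256 hashes to identify programs and the `can_run` check are assumptions made for the example and do not describe Sharemind MPC's actual interfaces.

```python
import hashlib


def program_id(source: str) -> str:
    """Identify a program by the SHA-256 hash of its source (an assumption)."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


class Node:
    """A toy MPC node with a fixed allow-list and no way to upload programs."""

    def __init__(self, name: str, allowed_sources: list[str]) -> None:
        self.name = name
        # The allow-list is frozen when the node is set up.
        self._allowed = frozenset(program_id(s) for s in allowed_sources)

    def approves(self, source: str) -> bool:
        return program_id(source) in self._allowed


def can_run(nodes: list[Node], source: str) -> bool:
    """A program runs only if every node has it on its allow-list."""
    return all(node.approves(source) for node in nodes)


if __name__ == "__main__":
    agreed = "count people per region"     # placeholder program text
    rogue = "list locations of person X"   # placeholder program text
    nodes = [
        Node("server-1", [agreed]),
        Node("server-2", [agreed]),
        Node("server-3", [agreed, rogue]),  # one operator tries to add more
    ]
    print("agreed program runs:", can_run(nodes, agreed))
    print("rogue program runs:", can_run(nodes, rogue))
```

In this model a single operator who extends its own allow-list gains nothing, because the other two nodes still refuse the program.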

This also means that we have achieved our third goal - that no single server can recover secret data or run any queries on it. Therefore, we have achieved all the goals we set out to achieve and we can build services that collect data while keeping it safe from access and unauthorised use in the future.

If you want to know more about how Sharemind has backed companies and organisations around the world in the secure processing of business data and how it could help you, take a look at our blog.

Written by Dan Bogdanov