Pleak is an open-source tool for analysing privacy in business processes and pointing out possible leakages. Any collaborative data processing or communication is likely to reveal some information; otherwise, the exchange would be meaningless.

However, when sensitive information is involved, it is very important to understand clearly what is leaked to whom. If the answer to the “what” is complicated, then the leakage needs to be measured in terms of the stakes or private inputs of the parties. Pleak is built to study, document, and communicate the privacy risks that arise from processes as they are designed.

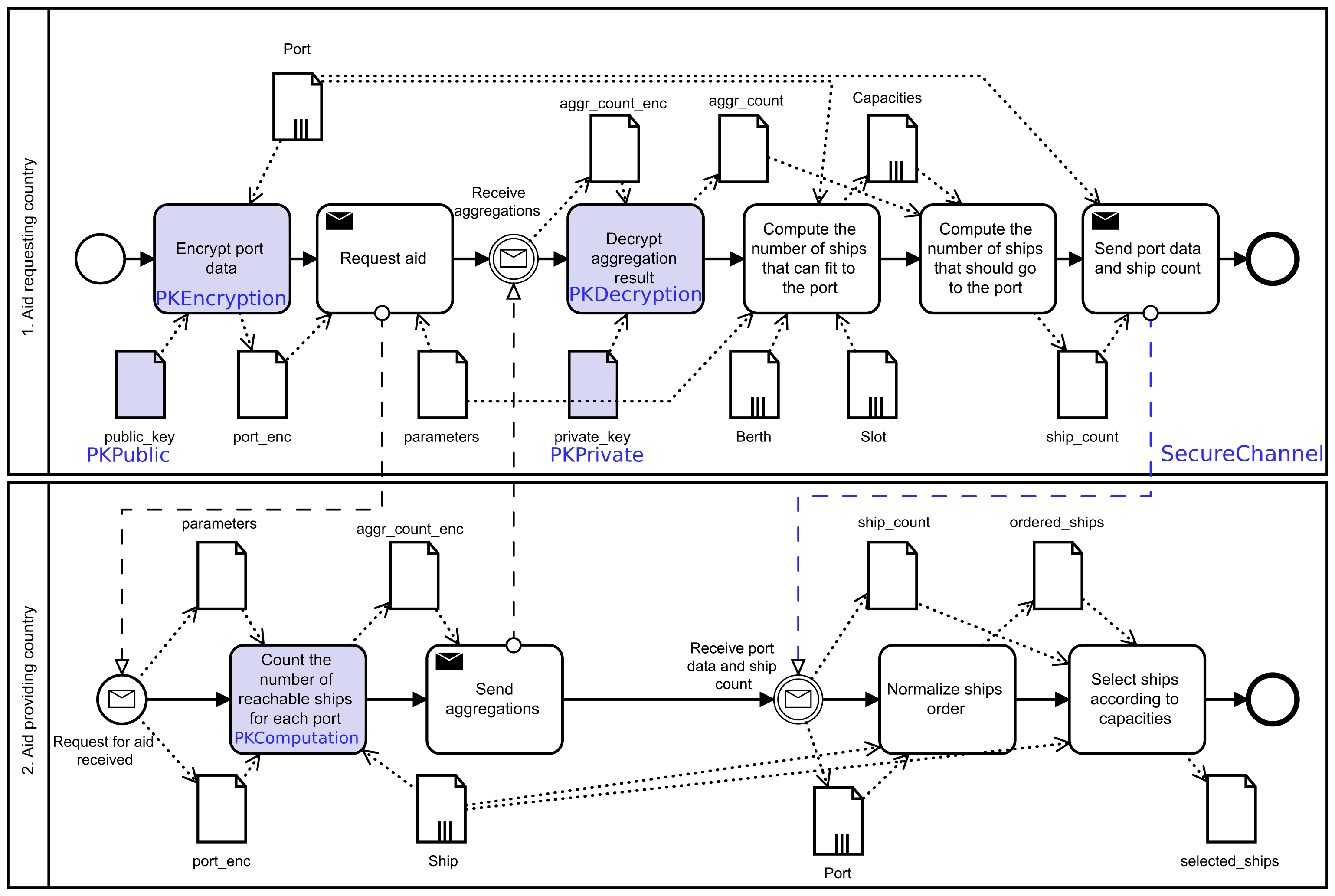

Using Pleak starts with modelling the business processes in the Business Process Model and Notation (BPMN) language. BPMN models should be human readable and focus on one concrete subprocess at a time, so that subject matter experts can verify their correctness. However, analysing privacy leakages requires a bigger picture of how the different processes interact with each other. With its composition tool, Pleak bridges the gap between human readability and the extensive detail needed for formal analysis. The tool lets the analyst easily combine human-readable models into one analysable model. It is especially valuable when different parts of the process are verified by different experts who each focus on their own part, and Pleak brings the parts together into one comprehensive analysis. For example, the processes of the countries providing and requesting aid can be joined into the following aid provision process.

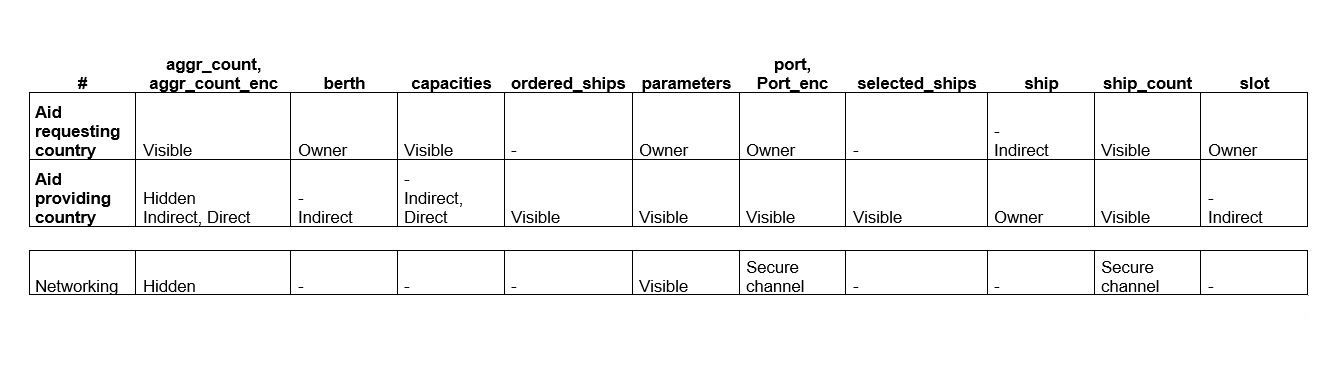

The first layer of Pleak's analysis, the Boolean level of who sees what in the process, can already be carried out on the pure BPMN model. However, this very often reports leakages that are in fact prevented by technical means, e.g. secure network channels. Hence, Pleak uses privacy-enhanced BPMN (PE-BPMN), which allows the analyst to specify the data protection measures that are applied. In PE-BPMN, some tasks are marked as tasks of a privacy-preserving technology, e.g. a task for encryption and a task for decryption. A PE-BPMN model is a good basis for a more thorough look at the privacy of the system. Our automated analysis outputs a table that summarises which data is seen by which party. For everything a party sees, we also analyse which inputs of the process might affect the view of that party. For example, the following table shows that the ship data belongs to the aid provider. The aid provider also sees information about ports (marker Visible), but for the aggregated count it only sees the ciphertext (marker Hidden). The table also shows that the aid requester sees something indirectly derived from the ship data object. Moreover, some data is sent over the network: for example, the parameters are sent visibly, but the port data is sent over a secure network channel.
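To make the idea of such a table concrete, here is a minimal sketch of how individual observations could be collapsed into one marker per party and data object. It is not Pleak's implementation or output format: the function `visibility_table`, the marker names `owner` and `none`, the ranking of markers, and the event list are all our own assumptions, loosely modelled on the example above.

```python
from collections import defaultdict

# Assumed ordering of markers from weakest to strongest access.
RANK = {"none": 0, "hidden": 1, "visible": 2, "owner": 3}


def visibility_table(events):
    """Collapse (party, data, marker) events into one marker per cell.

    If a party meets the same data object several times, the strongest
    marker wins: seeing the plaintext once outweighs seeing a ciphertext.
    Cells that never appear in the events default to "none".
    """
    table = defaultdict(lambda: defaultdict(lambda: "none"))
    for party, data, marker in events:
        if RANK[marker] > RANK[table[party][data]]:
            table[party][data] = marker
    return table


# Hypothetical observations based on the textual description of the example.
events = [
    ("aid provider", "ship data", "owner"),
    ("aid provider", "port data", "visible"),
    ("aid provider", "aggregated count", "hidden"),   # only the ciphertext
    ("network", "parameters", "visible"),             # sent in the clear
    ("network", "port data", "hidden"),               # secure channel
]

table = visibility_table(events)
for party in ("aid provider", "network"):
    for data, marker in table[party].items():
        print(f"{party:12} | {data:16} | {marker}")
```

The useful property of this layer is the default: any cell that stays at `none` is a statement that the data cannot reach that party at all.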

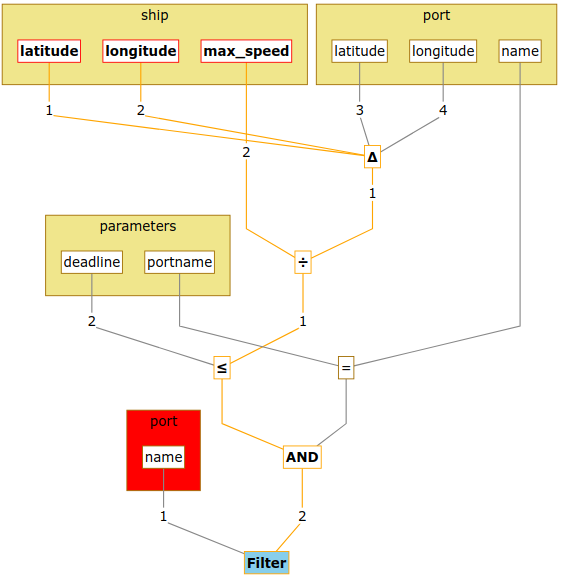

Essentially, this layer of analysis establishes that some things cannot leak to some parties. However, for everything that is in any way accessible to a party, we should dig deeper. In our example, it would be natural for the aid-providing country to want to find out which data about the ship is leaked to the aid-requesting country. We have devised two flavours of leaks-when analysis to get a deeper understanding of the conditions of potential leakages. Using them requires the analyst to specify the actual content of the data processing. The tasks are specified using either SQL scripts or Pleak’s pseudocode.

For SQL queries, the semantics are very clear, so the analysis combines and simplifies the queries of the process and presents the user with graphs of the computation. For each field in a data object, we can see exactly which fields of the input data are used to derive it, and how (the “leaks”, or first-branch, part of the analysis). In addition, we can see all the conditions that must hold for the specific value to appear in the output (the “when” part of the analysis).

Our own pseudocode offers the user more freedom to specify different flavours of processes. In BPMN leaks-when analysis, the focus is on the decision points in the process and on operations that perform any sort of filtering. Hence, for each data field of the output, the analysis shows which predicates need to hold for the input data to affect the output. It also shows which filters have been applied to the inputs before they may flow to the output. For our example, leaks-when analysis shows that the ship’s location and maximum speed are used in the condition branch of the computations for the aggregated count. In this process, this seems a reasonable leakage, because we should only consider ships that have a chance of providing aid within the desired deadline.
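The split between the “leaks” and the “when” part can be illustrated with a toy dependency trace. This is only a sketch in the spirit of leaks-when analysis, not Pleak's algorithm: the task description format, the function `trace`, and every field name below (such as `ship.location` or `arrival_time`) are hypothetical.

```python
def trace(field, tasks, inputs):
    """Return (value_deps, condition_deps) of `field` as sets of process inputs.

    value_deps:     inputs whose values flow into the field itself.
    condition_deps: inputs that only decide whether or how rows are filtered.
    Assumes the task descriptions contain no cycles.
    """
    if field in inputs:
        return {field}, set()
    task = tasks[field]
    value_deps, cond_deps = set(), set()
    for src in task["uses"]:
        v, c = trace(src, tasks, inputs)
        value_deps |= v
        cond_deps |= c
    for src in task["filters_on"]:
        v, c = trace(src, tasks, inputs)
        # Anything feeding a predicate influences the output only as a condition.
        cond_deps |= v | c
    return value_deps, cond_deps


# Hypothetical process inputs and derived fields.
inputs = {"ship.location", "ship.max_speed", "port.location", "deadline"}
tasks = {
    # Estimated arrival time is computed from positions and speed.
    "arrival_time": {
        "uses": ["ship.location", "ship.max_speed", "port.location"],
        "filters_on": [],
    },
    # The count carries no input values; it only counts rows passing the filter.
    "aggregated_count": {
        "uses": [],
        "filters_on": ["arrival_time", "deadline"],
    },
}

leaks, when = trace("aggregated_count", tasks, inputs)
print("leaks:", sorted(leaks))
print("when: ", sorted(when))
```

In this toy model the count has an empty “leaks” set, while the ship's location and maximum speed show up only on the “when” side, which mirrors the observation made for the example process.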

Leaks-when analysis offers the user more insight into the structure of the computations than a simple BPMN model could. However, after leaks-when analysis, the question remains whether the chosen filters or performed computations are sufficient to limit the potential leakage. Pleak's third layer of analysis quantifies the leakage, either in terms of the sensitivity of the process or in terms of the adversary's advantage in guessing some private input after observing the outputs of the process. These analyses are usually applicable when the outputs of a workflow are numeric values, and most analyses in this category benefit from having access to the actual data.

Sensitivity tells us how much the output of the process may change given some small change in the input: either a change in a value, or the addition or removal of rows in the input tables. The guessing advantage analysis, on the other hand, lets the user ask how much the adversary gains from seeing some of the process outputs when the adversary's goal is to guess something about the inputs. In our example, we may consider an adversary that is trying to guess the location of a ship. Currently, there is nothing that a network observer can see, but it is fair to ask whether sending the ship count over the secure network channel is really essential. The guessing advantage allows us to ask whether we could protect the approximate location of a ship so that the adversary’s probability of guessing it correctly would not increase too much. For example, if we allow an improvement of at most 30%, the analysis tells us to expect about 15% of noise when using differential privacy. Hence, we could stop using the secure channel if this noise and the increase in guessing probability are acceptable.
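As a rough illustration of the two notions, the sketch below measures how much a toy ship count changes when a single row is removed, and then adds Laplace noise scaled to that sensitivity, which is the standard mechanism for differentially private counts. It is not Pleak's analyser and does not reproduce the percentages quoted above: the data, the field names `distance_km` and `max_speed_kmh`, and all function names are assumptions made for this example.

```python
import random


def count_reachable(ships, max_hours):
    """Number of ships that can arrive within max_hours (toy model)."""
    return sum(
        1 for s in ships if s["distance_km"] / s["max_speed_kmh"] <= max_hours
    )


def row_sensitivity(query, rows):
    """Largest change in query(rows) caused by removing any single row.

    This is an empirical, local measurement on one concrete table.
    """
    base = query(rows)
    return max(
        (abs(base - query(rows[:i] + rows[i + 1:])) for i in range(len(rows))),
        default=0,
    )


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def noisy_count(ships, max_hours, epsilon, rng):
    # A count changes by at most 1 when one row is added or removed,
    # so the Laplace scale is 1 / epsilon.
    sensitivity = 1
    return count_reachable(ships, max_hours) + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical ship table.
ships = [
    {"distance_km": 100, "max_speed_kmh": 40},  # 2.5 h
    {"distance_km": 300, "max_speed_kmh": 30},  # 10 h
    {"distance_km": 50, "max_speed_kmh": 25},   # 2 h
]


def query(rows):
    return count_reachable(rows, max_hours=5)


print("exact count:", query(ships))
print("row sensitivity:", row_sensitivity(query, ships))
print("noisy count:", noisy_count(ships, 5, epsilon=1.0, rng=random.Random(0)))
```

A smaller `epsilon` means more noise and therefore less for the adversary to gain; choosing it is exactly the trade-off between accuracy and guessing advantage discussed above.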

For more details about Pleak, see pleak.io and pleak.io/wiki. The model used as an example can be found at https://pleak.io/app/#/view/HHQEWDWiweWX2HOmTGU4. All the described analyses can be run on this model using the appropriate analysers.

Written by Pille Pullonen