Deploying secure multiparty computation techniques is seldom a straightforward replacement of how things are currently done; instead, it enables collaborations that were previously considered impossible. It is a complex process, as privacy requirements and technological solutions are deeply interleaved. Hence, we need tools to weigh the options and discuss changes to a process in an easily understandable manner.

Pleak

Pleak does exactly this by both visualizing and analyzing processes. Specifically, Pleak is a web application for studying the effect of privacy-enhancing technologies, such as secure computation, in the context of the desired business process. The starting point in Pleak is a model of the desired computations in the well-known Business Process Model and Notation (BPMN). The next steps enrich this model with information about the deployed privacy-enhancing technologies, using the Privacy-Enhanced BPMN (PE-BPMN) notation, so that the privacy properties of the model can be studied.

Satellite Collision Analysis

Consider the case where Sharemind is used to estimate satellite collision probabilities. Two nations each have knowledge about their own satellites and would like to find the probability that any of their satellites will collide. Without sharing data, each nation can only estimate this from its own knowledge, but Sharemind enables them to collaborate while keeping their secrets protected.

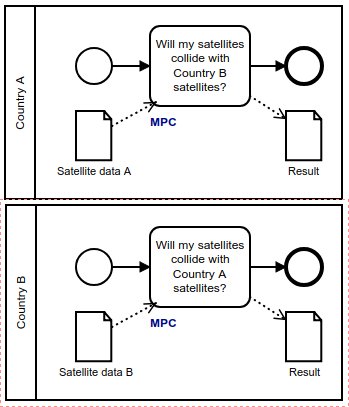

Figure 1: Simple satellite collision model on Sharemind

For example, we can create a simple satellite collision model that contains secure multiparty computation (MPC) tasks, as in Figure 1. In terms of privacy, this small model already specifies what we need: the result must be computed securely, and the parties should learn only the collision risks and nothing more about each other's inputs. But what do the nations have to do to deploy Sharemind in this setting? In general, the participants need a way to share their data under some form of protection that still allows them to compute the desired result. Sharemind tackles this issue in two ways: secret sharing, with computations written in the SecreC language (Sharemind MPC), and secure hardware (Sharemind HI).

Sharemind MPC

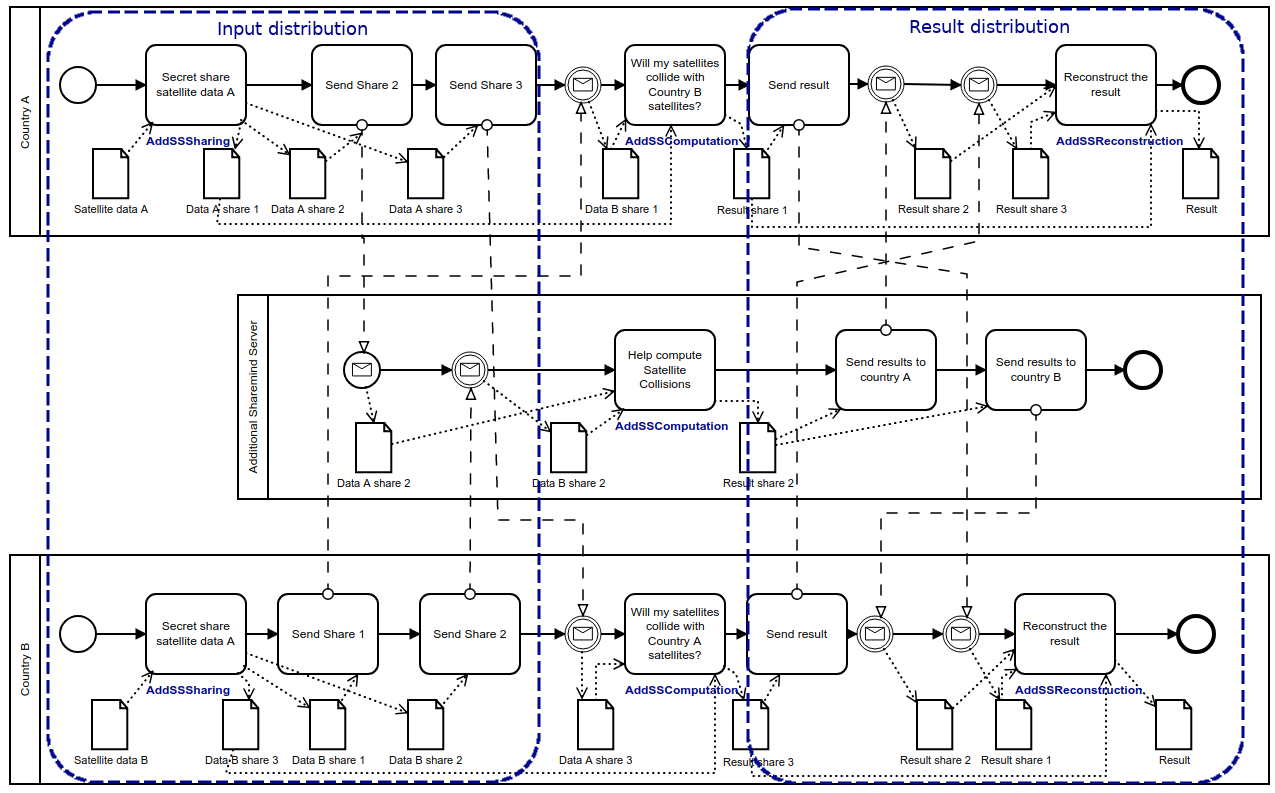

Figure 2: Detailed satellite collision model on Sharemind MPC

It is reasonable to assume that each nation deploys one of the Sharemind servers itself to retain maximum control over the computations, while the third server is run by a neutral party. Both countries need to secret-share their inputs and distribute the shares among the servers in the Sharemind data upload process. The servers then compute the collision probabilities on the shares, and finally the publishing step reveals the output from the shares, as shown in Figure 2. The PE-BPMN notation specifies the privacy-aware tasks that each party needs to carry out, such as sharing its data and participating in the computations.
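To make the upload and publishing steps more concrete, here is a minimal sketch of additive secret sharing among three servers. It assumes integer values in the ring of 32-bit integers and uses hypothetical inputs; it illustrates the general technique, not Sharemind's actual protocol or API. Only local addition is shown: a real collision computation also needs interactive protocols between the servers for operations such as multiplication.

```python
import secrets

# Toy additive secret sharing over the ring Z_{2^32}. Illustrative sketch only,
# not Sharemind's implementation or API.
MODULUS = 2**32
NUM_SERVERS = 3  # one server per nation plus one run by a neutral party


def share(value: int, n: int = NUM_SERVERS) -> list[int]:
    """Upload step: split `value` into n random shares that sum to it mod 2^32."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def reconstruct(shares: list[int]) -> int:
    """Publishing step: combine all shares to reveal the value."""
    return sum(shares) % MODULUS


def add_shared(a: list[int], b: list[int]) -> list[int]:
    """Each server adds its own two shares locally; no communication is needed."""
    return [(x + y) % MODULUS for x, y in zip(a, b)]


# Hypothetical integer inputs standing in for each nation's private data.
nation_a_input = 1234
nation_b_input = 5678

shares_a = share(nation_a_input)
shares_b = share(nation_b_input)

# Server i holds only shares_a[i] and shares_b[i]; fewer than all three shares
# are uniformly random and reveal nothing about the inputs.
result_shares = add_shared(shares_a, shares_b)
print(reconstruct(result_shares))  # 6912
```

Because any incomplete set of shares looks uniformly random, a single server, including one run by the other nation, learns nothing about an input from the shares it holds.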

Sharemind HI

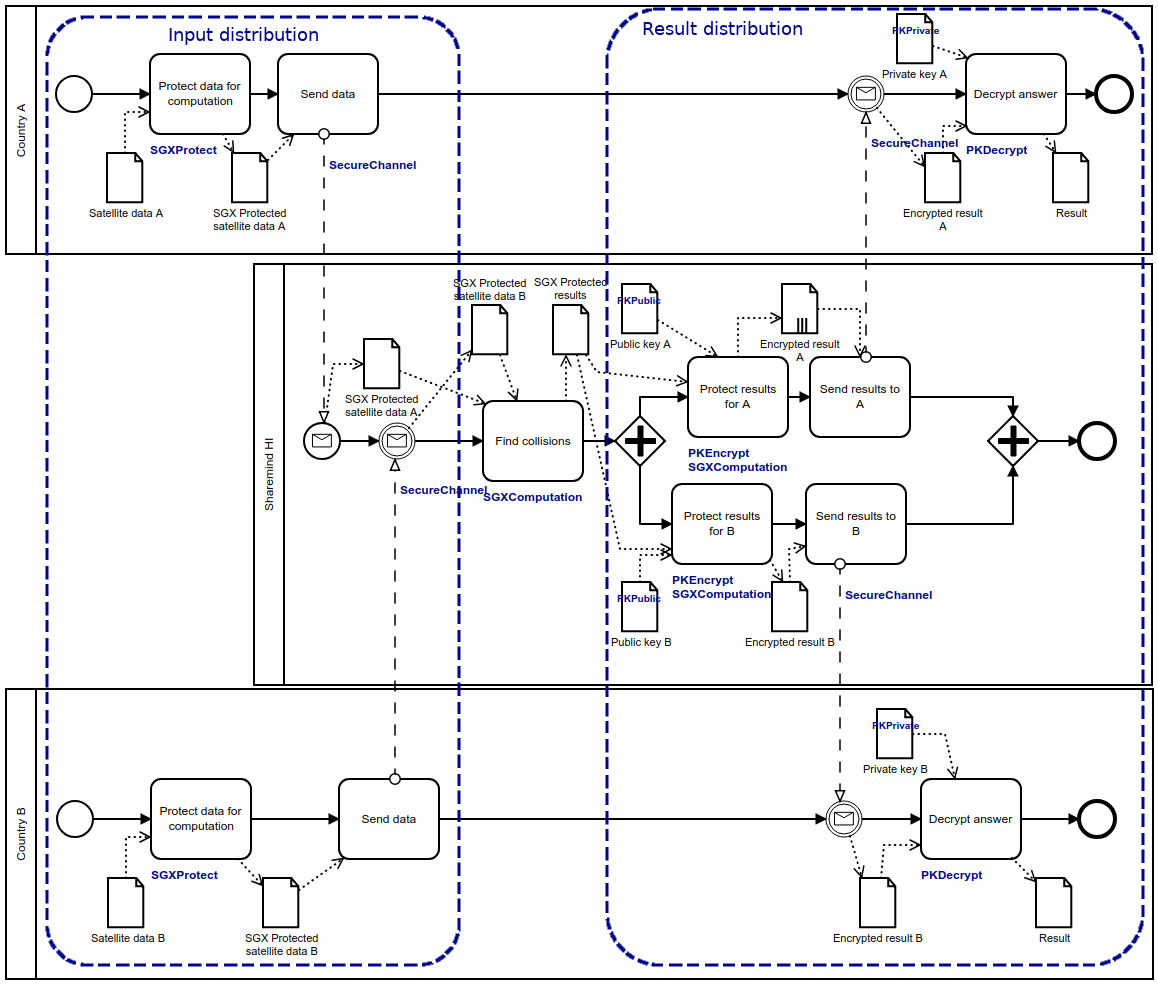

Figure 3: Detailed satellite collision model on Sharemind HI

The same task can be tackled with Sharemind HI, where it is up to the nations to send their inputs to the HI server. Here, the countries do not participate in the computation themselves, but they still need to upload their encrypted data and receive the encrypted results. This results in the model in Figure 3, where the Sharemind HI server performs the collision analysis and encrypts the result for both nations. As before, PE-BPMN specifies the tasks that deal with data protection or operate on protected data.
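The Sharemind HI flow can be viewed as a data flow between key holders. The sketch below uses textbook RSA with tiny hard-coded primes purely as a stand-in for real encryption; it is insecure and is not how Sharemind HI actually protects data. The `collision_analysis` function is a hypothetical placeholder, and encrypting the uploads under a server public key is an assumption made for illustration.

```python
# Toy sketch of the Sharemind HI data flow. Textbook RSA with tiny primes is an
# INSECURE stand-in for real encryption; it only shows who holds which key.


def make_keypair(p: int, q: int, e: int = 65537):
    """Return (public_key, private_key) for textbook RSA."""
    n = p * q
    d = pow(e, -1, (p - 1) * (q - 1))  # modular inverse (Python 3.8+)
    return (n, e), (n, d)


def encrypt(public_key, m: int) -> int:
    n, e = public_key
    return pow(m, e, n)


def decrypt(private_key, c: int) -> int:
    n, d = private_key
    return pow(c, d, n)


def collision_analysis(a: int, b: int) -> int:
    """Hypothetical placeholder for the real collision computation."""
    return a + b


# Key setup: each nation keeps its private key; the HI server holds the
# nations' public keys so that it can encrypt the results for them.
hi_pub, hi_priv = make_keypair(1031, 1033)  # assumed server key pair
a_pub, a_priv = make_keypair(1009, 1013)
b_pub, b_priv = make_keypair(1019, 1021)

# Upload: each nation encrypts its (hypothetical) input for the HI server.
upload_a = encrypt(hi_pub, 1234)
upload_b = encrypt(hi_pub, 5678)

# Inside the secure hardware: decrypt, compute, encrypt the result per nation.
result = collision_analysis(decrypt(hi_priv, upload_a), decrypt(hi_priv, upload_b))
result_for_a = encrypt(a_pub, result)
result_for_b = encrypt(b_pub, result)

# Each nation decrypts its copy of the result with its own private key.
print(decrypt(a_priv, result_for_a), decrypt(b_priv, result_for_b))  # 6912 6912
```

Unlike the MPC sketch, the nations here only encrypt and decrypt; all computation happens on the server side.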

PE-BPMN privacy analysis

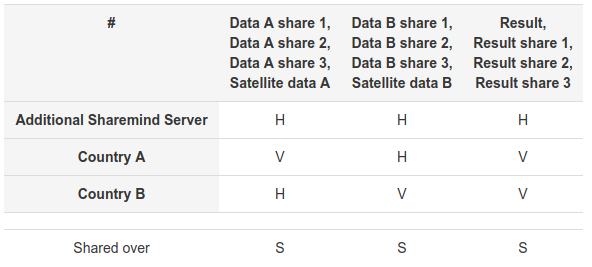

Figure 4: Disclosure table for satellite collision analysis on Sharemind MPC

The models help the nations understand their role in either of the two deployments. However, concrete technologies make the models significantly more complex than the conceptual model that simply uses MPC, so steps need to be taken to ensure that the resulting process is still privacy-preserving. Pleak's automated disclosure reports confirm that, in this case, both deployments achieve the desired level of privacy. The disclosure reports show which party (in rows) has access to which data (in columns) and whether any form of data protection is applied to the data. For example, Figure 4 shows the disclosure table for the Sharemind MPC case. The marker V (for visible) means that a party sees the data in plain form: each nation sees its own input and the desired result. The marker H (for hidden) denotes data objects that a party holds but whose contents are protected by a cryptographic technique, e.g. secret sharing, and are therefore not at risk of being leaked. For example, neither nation learns the input of the other. The row “shared over” indicates that all network communication is designed to use a secure channel, ensuring privacy against network eavesdroppers. The parties' view of the private data remains the same as in the conceptual case, and the disclosure reports hide the complexity of the actual process. Therefore, the nations can see that privacy is guaranteed without studying the technologies and processes of the other stakeholders in detail.
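A disclosure table can be thought of as a mapping from (party, data object) to a visibility marker. The sketch below builds such a table from a list of hypothetical facts loosely modeled on the Sharemind MPC case and then checks that no party sees another nation's input in plain form. It illustrates the idea only; it is not Pleak's analysis algorithm, and the facts, party names, and channel labels are assumptions rather than the contents of Figure 4.

```python
from collections import defaultdict

# Hypothetical facts: (party, data object, protected?). A fact means the party
# holds the object, either in plain form or only in protected form (a share).
FACTS = [
    ("Nation A", "A input", False),
    ("Nation A", "B input", True),  # A's server holds a share of B's input
    ("Nation A", "Collision risks", False),
    ("Nation B", "B input", False),
    ("Nation B", "A input", True),
    ("Nation B", "Collision risks", False),
    ("Neutral party", "A input", True),
    ("Neutral party", "B input", True),
]

# Hypothetical "shared over" row: the channel used to transmit each object.
SHARED_OVER = {"A input": "secure channel", "B input": "secure channel",
               "Collision risks": "secure channel"}

# Owner of each private input; anything not listed is meant to be shared.
OWNERS = {"A input": "Nation A", "B input": "Nation B"}


def disclosure_table(facts):
    """Return {party: {data object: 'V' or 'H'}}; 'V' wins over 'H'."""
    table = defaultdict(dict)
    for party, data, protected in facts:
        if table[party].get(data) != "V":
            table[party][data] = "H" if protected else "V"
    return table


def visible_to_non_owners(table, owners):
    """List (party, data) pairs where a private input is visible to a non-owner."""
    return [(party, data) for party, row in table.items()
            for data, marker in row.items()
            if marker == "V" and data in owners and owners[data] != party]


def print_table(table, shared_over):
    columns = list(shared_over)
    print("".ljust(15) + "".join(c.ljust(17) for c in columns))
    for party, row in table.items():
        print(party.ljust(15) + "".join(row.get(c, "-").ljust(17) for c in columns))
    print("shared over".ljust(15) + "".join(shared_over[c].ljust(17) for c in columns))


table = disclosure_table(FACTS)
print_table(table, SHARED_OVER)
print(visible_to_non_owners(table, OWNERS))  # []
```

An empty list corresponds to the property read off the real table: each nation sees only its own input and the result, while everything else it holds is hidden.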

Pleak generates the disclosure tables from PE-BPMN models. For example, the table for Sharemind HI can be seen by opening the PE-BPMN model of performing collision analysis on Sharemind HI, clicking “Validate”, and choosing “Analyze” under simple disclosure analysis. The output shows that the parties' view is similar: each still sees its own input and the result. In addition, each party knows its private key, and the Sharemind HI server has the respective public keys.

Summary

The technology-specific models are used to discuss the deployment. The analysis tables summarize the process and draw attention to data objects with a privacy risk. It is up to the user to decide whether the indicated disclosure is acceptable. Pleak can help by studying data dependencies to better evaluate the risk. In addition to PE-BPMN process models, Pleak offers quantitative and qualitative leakage analysis. Pleak's multi-layered toolset visualizes the privacy aspects of a process using a well-known notation and makes the impact of the selected techniques easy to understand. For more information, see the Pleak wiki.